Wherobots Apache Airflow Provider

Wherobots offers an Apache Airflow provider that enables Wherobots Job Runs and SQL Sessions to be orchestrated from Apache Airflow DAGs.

These features are particularly useful for automating spatial ETL workloads.

Benefits

You can use Airflow to automate, schedule, and manage complex ETL tasks that run on your data.

For example, you might run training and inference on large datasets in batches rather than all at once to use resources more efficiently. Airflow can schedule these batch jobs to run at fixed time intervals or trigger them on events such as the arrival of new data.
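Airflow's scheduler takes care of interval-based batching for you. The plain-Python sketch below only illustrates the idea of splitting a backlog of data into fixed time windows, one window per batch run. `batch_windows` is a hypothetical helper, not part of Airflow or the Wherobots provider.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def batch_windows(start: datetime, end: datetime, step: timedelta) -> Iterator[Tuple[datetime, datetime]]:
    """Yield consecutive [window_start, window_end) intervals covering start..end.

    Each window stands for one batch run; the last window is clipped to `end`.
    """
    if step <= timedelta(0):
        raise ValueError("step must be positive")
    window_start = start
    while window_start < end:
        window_end = min(window_start + step, end)
        yield window_start, window_end
        window_start = window_end


# Split three and a half days of incoming data into daily batches.
for lo, hi in batch_windows(datetime(2024, 1, 1), datetime(2024, 1, 4, 12), timedelta(days=1)):
    print(lo.isoformat(), "->", hi.isoformat())
```

Half-open windows make consecutive batches meet exactly, so no record is processed twice or skipped at a boundary.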

Before you start

Before installing the Wherobots Apache Airflow Provider, ensure that you have the following required resources:

- Python version ≥ 3.8

- Wherobots API key. For more information, see API keys in the Wherobots documentation.

- Apache Airflow. For more information, see Installation of Airflow in the Apache Airflow documentation.
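Two of these prerequisites can be checked from the Python environment you plan to use. The sketch below, built only on the standard library, tests the interpreter version against 3.8 and looks for an `airflow` executable on `PATH`; the function names are hypothetical, and the API key cannot be validated this way.

```python
import shutil
import sys
from typing import Sequence, Tuple

MINIMUM_PYTHON: Tuple[int, int] = (3, 8)


def python_is_supported(version_info: Sequence[int] = sys.version_info[:2],
                        minimum: Tuple[int, int] = MINIMUM_PYTHON) -> bool:
    """Return True if the given (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


def airflow_cli_available() -> bool:
    """Return True if an `airflow` executable is found on PATH."""
    return shutil.which("airflow") is not None


if __name__ == "__main__":
    print("Python >= 3.8:", python_is_supported())
    print("airflow CLI on PATH:", airflow_cli_available())
```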

Setup

Install from PyPI

You can install the Wherobots Apache Airflow provider from PyPI with pip:

```shell
pip install airflow-providers-wherobots
```

Alternatively, add airflow-providers-wherobots to the dependencies of your Apache Airflow environment.
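To confirm that the package landed in the same Python environment that runs Airflow, you can query its installed version with the standard library's `importlib.metadata` (available from Python 3.8). A minimal sketch; `installed_version` is a hypothetical helper name.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str = "airflow-providers-wherobots") -> Optional[str]:
    """Return the installed version of `distribution`, or None if it is missing."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("airflow-providers-wherobots is not installed in this environment")
    else:
        print(f"airflow-providers-wherobots {version} is installed")
```

Run it with the interpreter that your Airflow scheduler and workers use; a package installed into a different virtual environment will not be found.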

Create a new Connection in the Airflow Server

You first need to create a Connection in the Airflow Server. There are two ways to create a connection: through the CLI or through the UI.

Create through CLI

You can create the connection through the Apache Airflow CLI.

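Run the following from your command line, replacing $(< api.key) with your Wherobots API key. As written, $(< api.key) is Bash command substitution that reads the key from a file named api.key in the current directory.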

```shell
airflow connections add "wherobots_default" \
  --conn-type "generic" \
  --conn-host "api.cloud.wherobots.com" \
  --conn-password "$(< api.key)"
```

The command should print a confirmation that the connection was added. For more information, see Managing Connections in the Apache Airflow documentation.
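Airflow can also read a connection from an environment variable named AIRFLOW_CONN_ followed by the upper-cased connection ID. As an alternative to the CLI command, the sketch below builds that variable in Airflow's URI form for the same generic connection. The helper name and the placeholder key my-api-key are hypothetical. The API key sits in the password slot of the URI, so it is percent-encoded to keep characters such as / or @ from breaking the URI.

```python
from typing import Tuple
from urllib.parse import quote


def connection_env_var(conn_id: str, host: str, api_key: str) -> Tuple[str, str]:
    """Return (variable name, URI value) for a generic Airflow connection.

    The login is left empty; the API key is percent-encoded into the password slot.
    """
    name = f"AIRFLOW_CONN_{conn_id.upper()}"
    value = f"generic://:{quote(api_key, safe='')}@{host}"
    return name, value


name, value = connection_env_var("wherobots_default", "api.cloud.wherobots.com", "my-api-key")
print(f"{name}={value}")
# AIRFLOW_CONN_WHEROBOTS_DEFAULT=generic://:my-api-key@api.cloud.wherobots.com
```

Connections supplied through environment variables are not stored in Airflow's metadata database, so each Airflow component that needs the connection must see the variable.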

Create through UI

Alternatively, you can create the connection through the Apache Airflow UI by following these steps:

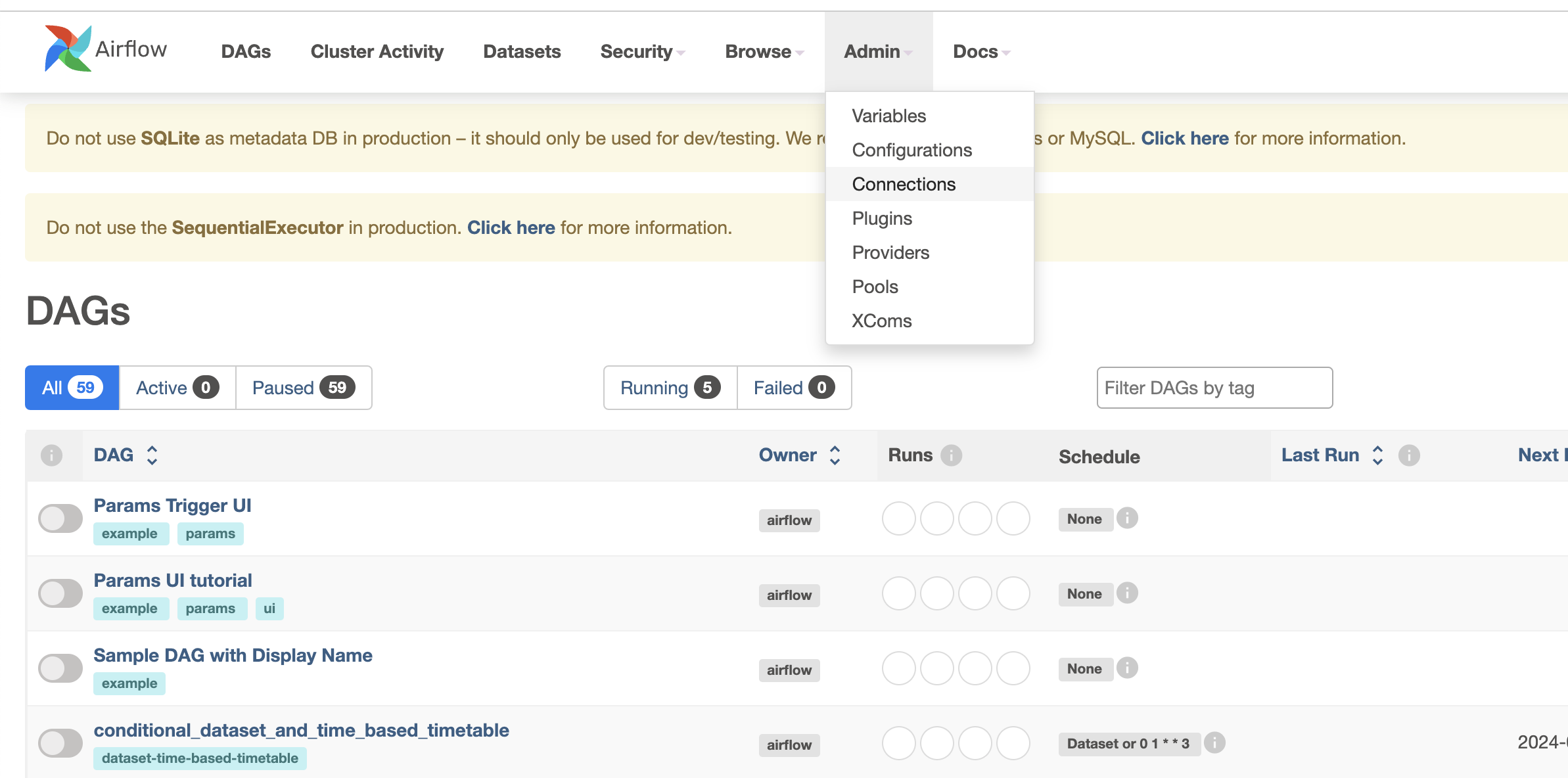

- Navigate to your Apache Airflow UI home page. click the

Admintab on the top right corner, and selectConnections.

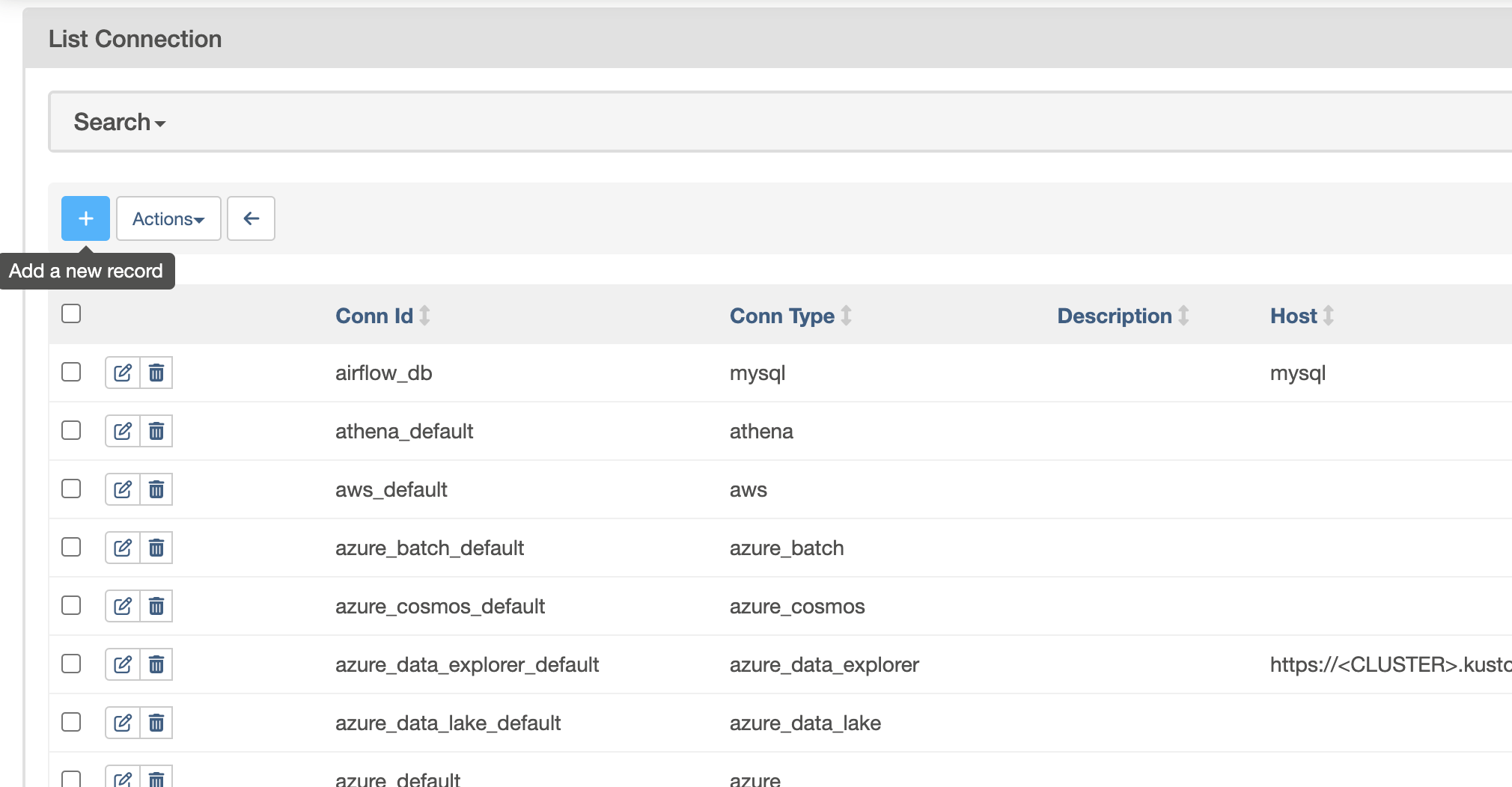

- Click the

+button to add a new connection.

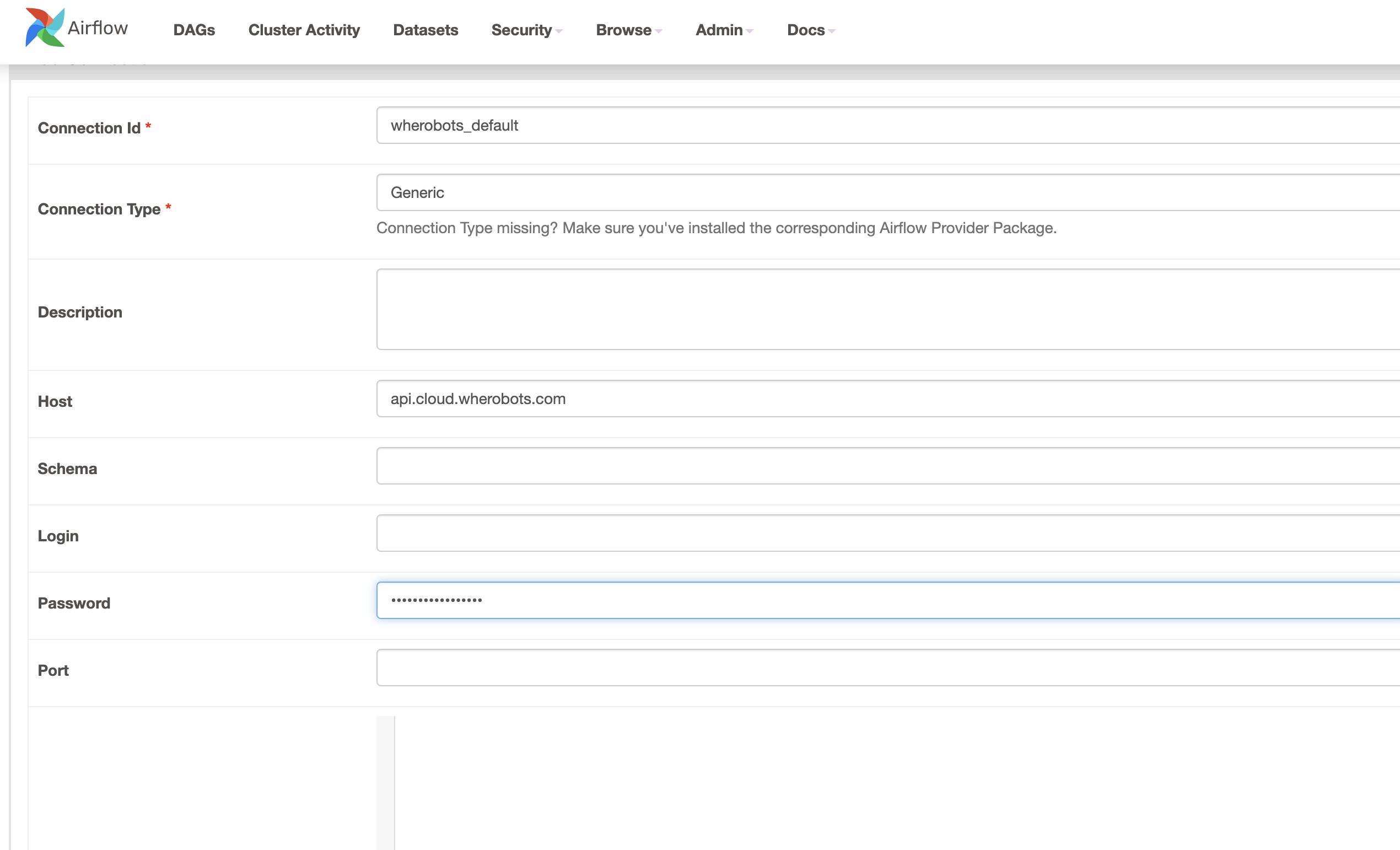

- Fill in the connection details:

- Connection ID:

wherobots_default - Connection Type:

generic - Host:

api.cloud.wherobots.com - Password: Your Wherobots API key

- Click

Saveto confirm the connection.
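The same form fields can also be expressed as a JSON connection, which Airflow 2.3 and later accept as the value of an AIRFLOW_CONN_WHEROBOTS_DEFAULT environment variable. A minimal sketch; connection_json_env is a hypothetical helper, and my-api-key stands in for your real key.

```python
import json
from typing import Tuple


def connection_json_env(conn_id: str, host: str, api_key: str) -> Tuple[str, str]:
    """Return (variable name, JSON value) for a generic Airflow connection.

    Keys mirror the UI form: Connection Type -> conn_type, Host -> host,
    Password -> password. The Connection ID becomes part of the variable name.
    """
    name = f"AIRFLOW_CONN_{conn_id.upper()}"
    value = json.dumps({"conn_type": "generic", "host": host, "password": api_key})
    return name, value


name, value = connection_json_env("wherobots_default", "api.cloud.wherobots.com", "my-api-key")
# Single-quote the JSON so a POSIX shell passes it through unchanged
# (this assumes the API key itself contains no single quote).
print(f"export {name}='{value}'")
```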